Navigating Kubernetes Informers

The Silent Watchers of Your Cluster

Imagine you’re tasked with monitoring a massive, distributed, Kubernetes-based e-commerce platform during Black Friday. In this high-stakes environment, with pods, services, and deployments constantly changing, how do you keep track of all the moving parts? You could poll the API server periodically, but that is inefficient, and you would miss changes that happen between polls. This is where informers come to the rescue! They provide a robust and efficient way to track and react to changes in your cluster in near real time.

What are Informers, Really?

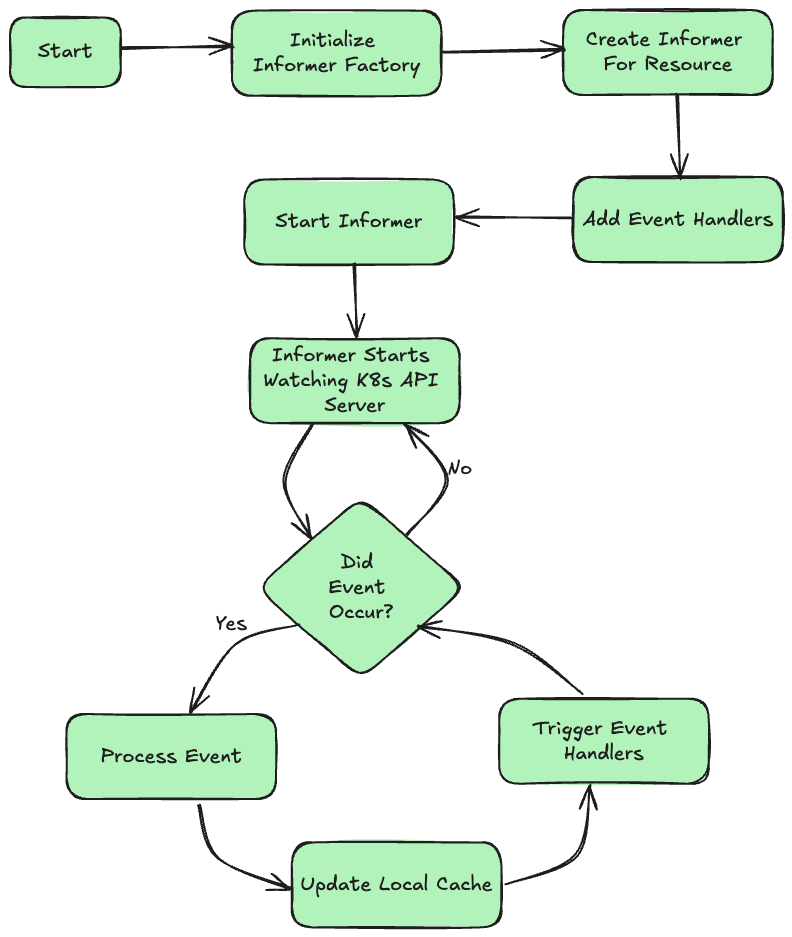

At their core, informers are a caching and notification mechanism that sits between your application logic and the Kubernetes API server. They watch for changes to resources such as pods, services, and deployments, dispatch events to your handlers, and maintain a local cache. Because they react to changes in near real time instead of polling, they also reduce the load on the API server.

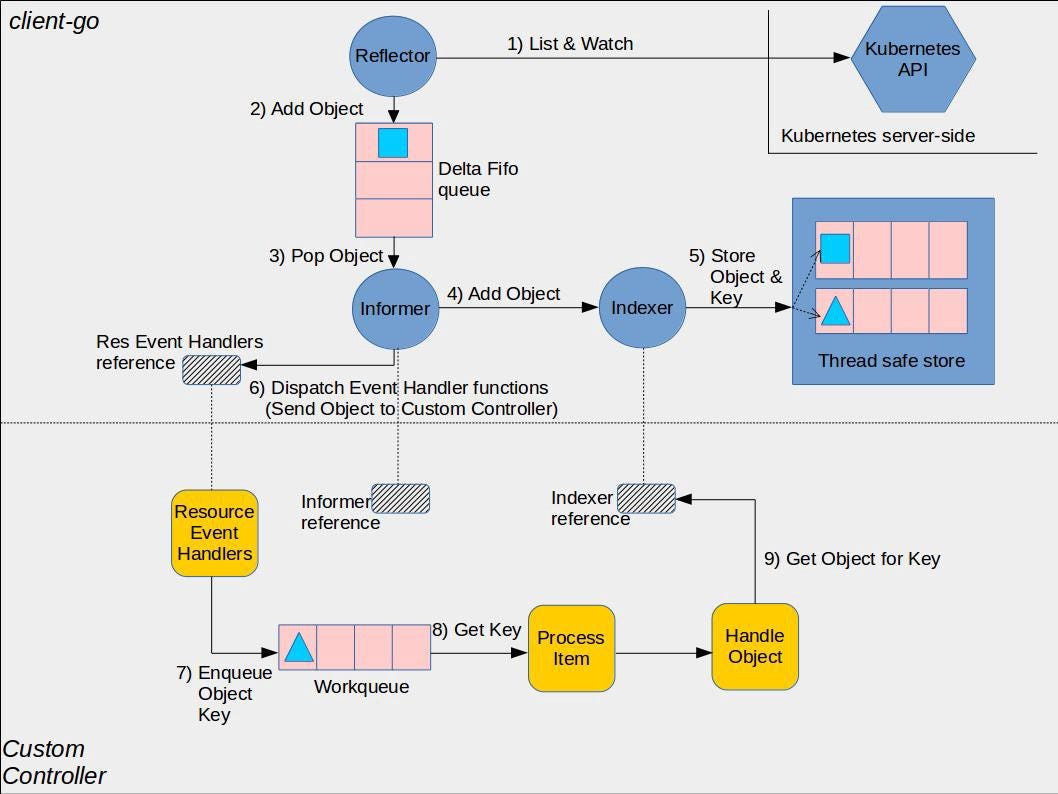

Source: https://github.com/kubernetes/sample-controller/blob/master/docs/images/client-go-controller-interaction.jpeg

Key components of Informers

Let's break down the Informer squad and see who does what in this well-oiled machine:

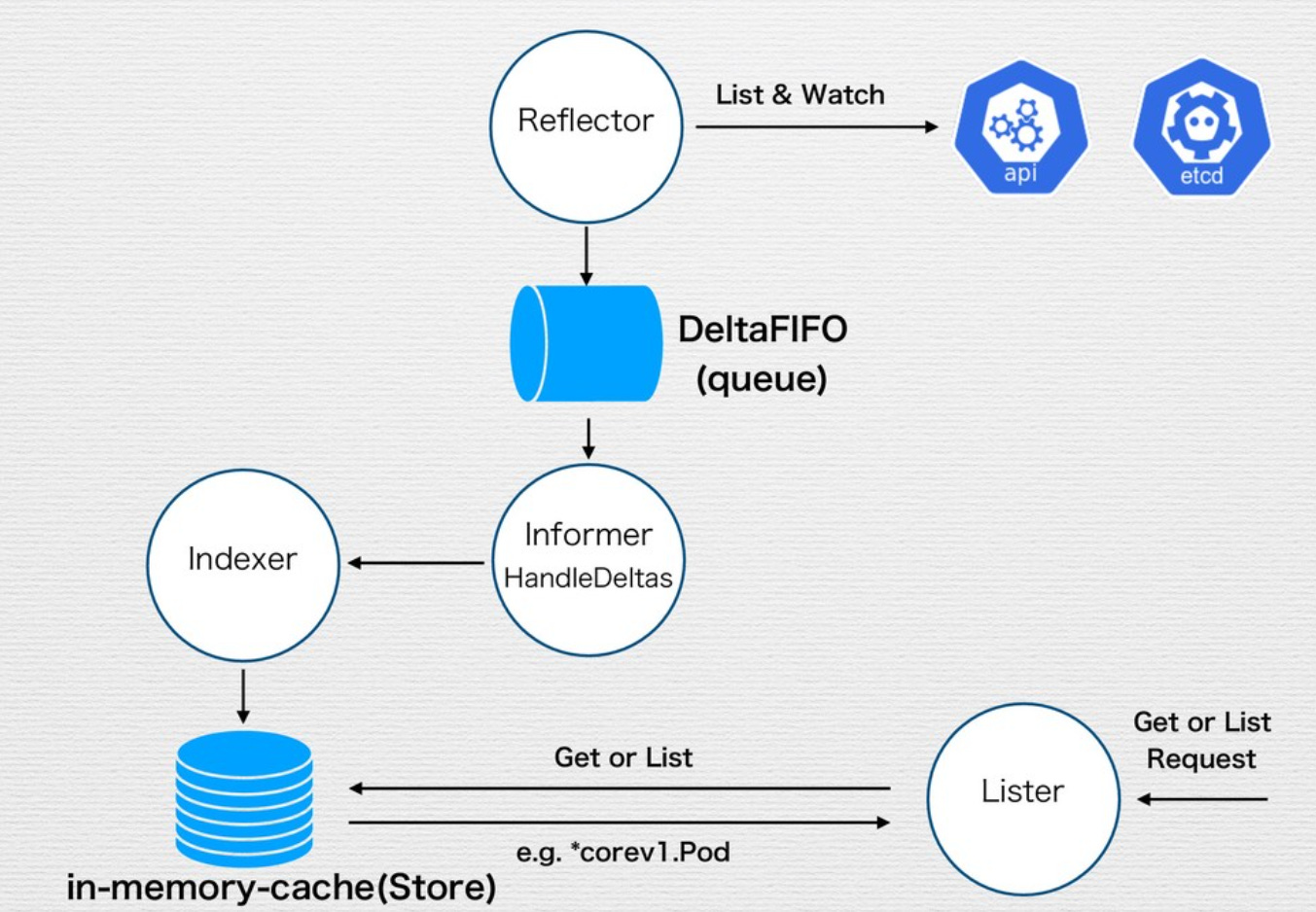

Reflector: The Reflector lists and watches resource objects on the Kubernetes API server and pushes every change it observes into the DeltaFIFO. This keeps the local cache in sync with the current state of the resources in the cluster.

DeltaFIFO: A thread-safe queue that holds, for each object, the list of Deltas that have not been processed yet. A Delta is the type stored by the DeltaFIFO: it records what changed (for example Added, Updated, or Deleted) together with the object's state after the change.

Store: The Store is the informer's local, thread-safe cache of objects. By caching resource data locally, informers reduce the need for frequent API calls to the Kubernetes server, which decreases the load on the server and reduces latency.

Indexer: The Indexer is the Store implementation informers use. It holds the cached resource objects and adds indexing and querying capabilities, allowing quick lookups by keys such as `namespace/name`, by namespace, or by custom index functions (for example, on a label value).

Lister: The Lister provides a read-only view of the objects stored in the Indexer. It is used to list and get resources efficiently from the cache.
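To make the Indexer and Lister roles concrete, here is a stdlib-only toy model rather than client-go's actual `cache.Indexer` API. The `Pod` type, `toyIndexer`, `podLister`, and the `app` label index are hypothetical stand-ins. As in client-go, objects are keyed by `namespace/name`, and each named index is computed by an index function that maps an object to zero or more index values.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// Pod is a hypothetical, trimmed-down stand-in for a Kubernetes Pod.
type Pod struct {
	Namespace, Name string
	Labels          map[string]string
}

// IndexFunc maps an object to zero or more index values, like client-go's cache.IndexFunc.
type IndexFunc func(p *Pod) []string

// toyIndexer is a hypothetical thread-safe store with secondary indexes.
type toyIndexer struct {
	mu       sync.RWMutex
	items    map[string]*Pod                       // "namespace/name" -> object
	indexers map[string]IndexFunc                  // index name -> index function
	indices  map[string]map[string]map[string]bool // index name -> value -> set of keys
}

// newPodIndexer builds an indexer with a "namespace" index and an "app" label index.
func newPodIndexer() *toyIndexer {
	idx := &toyIndexer{
		items: map[string]*Pod{},
		indexers: map[string]IndexFunc{
			"namespace": func(p *Pod) []string { return []string{p.Namespace} },
			"app": func(p *Pod) []string {
				if v, ok := p.Labels["app"]; ok {
					return []string{v}
				}
				return nil
			},
		},
		indices: map[string]map[string]map[string]bool{},
	}
	for name := range idx.indexers {
		idx.indices[name] = map[string]map[string]bool{}
	}
	return idx
}

// Add inserts or replaces an object and keeps every index consistent.
func (i *toyIndexer) Add(p *Pod) {
	i.mu.Lock()
	defer i.mu.Unlock()
	k := p.Namespace + "/" + p.Name
	if old, ok := i.items[k]; ok {
		for name, fn := range i.indexers { // drop stale index entries first
			for _, v := range fn(old) {
				delete(i.indices[name][v], k)
			}
		}
	}
	i.items[k] = p
	for name, fn := range i.indexers {
		for _, v := range fn(p) {
			if i.indices[name][v] == nil {
				i.indices[name][v] = map[string]bool{}
			}
			i.indices[name][v][k] = true
		}
	}
}

// ByIndex returns the sorted keys of objects whose index function produced value.
func (i *toyIndexer) ByIndex(indexName, value string) []string {
	i.mu.RLock()
	defer i.mu.RUnlock()
	keys := []string{}
	for k := range i.indices[indexName][value] {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	return keys
}

// podLister exposes only reads, mirroring the Lister's read-only role.
type podLister struct{ idx *toyIndexer }

func (l podLister) Get(namespace, name string) (*Pod, bool) {
	l.idx.mu.RLock()
	defer l.idx.mu.RUnlock()
	p, ok := l.idx.items[namespace+"/"+name]
	return p, ok
}

func main() {
	idx := newPodIndexer()
	idx.Add(&Pod{Namespace: "shop", Name: "cart-1", Labels: map[string]string{"app": "cart"}})
	idx.Add(&Pod{Namespace: "shop", Name: "cart-2", Labels: map[string]string{"app": "cart"}})
	idx.Add(&Pod{Namespace: "default", Name: "web-1", Labels: map[string]string{"app": "web"}})
	fmt.Println(idx.ByIndex("app", "cart"), idx.ByIndex("namespace", "default"))
	if p, ok := (podLister{idx}).Get("default", "web-1"); ok {
		fmt.Println(p.Labels["app"])
	}
}
```

Keeping the primary map and the secondary indexes behind one lock is what lets a query such as "all pods with `app=cart`" avoid scanning every cached object.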

How it all works together

The Reflector watches the K8s API server and sends updates to DeltaFIFO.

The informer pops Deltas from the DeltaFIFO and applies them to the Store.

The Indexer maintains indexes over the Store for efficient querying.

The Lister provides a read-only view of the data in the Store.

Event handlers are invoked for each change after the Store has been updated.
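The steps above can be modeled in a few dozen lines of plain Go. This is a single-goroutine sketch, not client-go's implementation: the real DeltaFIFO is thread-safe, blocks on `Pop`, and has more delta types (such as `Replaced` and `Sync`). The behavior it illustrates does match the description, though: deltas are grouped per object key, and the processing loop updates the store before notifying handlers. All type and function names here are hypothetical.

```go
package main

import "fmt"

type DeltaType string

const (
	Added   DeltaType = "Added"
	Updated DeltaType = "Updated"
	Deleted DeltaType = "Deleted"
)

// Delta records what happened to one object (toy version of a client-go Delta).
type Delta struct {
	Type DeltaType
	Key  string // "namespace/name"
	Obj  string // hypothetical payload, e.g. a pod phase
}

// toyDeltaFIFO queues keys in arrival order and accumulates every delta per key.
type toyDeltaFIFO struct {
	queue []string           // each key appears at most once
	items map[string][]Delta // key -> pending deltas, oldest first
}

func newToyDeltaFIFO() *toyDeltaFIFO { return &toyDeltaFIFO{items: map[string][]Delta{}} }

// Push is what the Reflector would call for every list/watch event.
func (f *toyDeltaFIFO) Push(d Delta) {
	if _, pending := f.items[d.Key]; !pending {
		f.queue = append(f.queue, d.Key)
	}
	f.items[d.Key] = append(f.items[d.Key], d)
}

// Pop removes the oldest key and returns all of its deltas.
func (f *toyDeltaFIFO) Pop() ([]Delta, bool) {
	if len(f.queue) == 0 {
		return nil, false
	}
	k := f.queue[0]
	f.queue = f.queue[1:]
	ds := f.items[k]
	delete(f.items, k)
	return ds, true
}

// Handler is a single callback standing in for ResourceEventHandlerFuncs.
type Handler func(t DeltaType, key, obj string)

// processAll drains the FIFO: update the store first, then notify the handler.
func processAll(f *toyDeltaFIFO, store map[string]string, h Handler) {
	for {
		ds, ok := f.Pop()
		if !ok {
			return
		}
		for _, d := range ds {
			if d.Type == Deleted {
				delete(store, d.Key)
			} else {
				store[d.Key] = d.Obj
			}
			h(d.Type, d.Key, d.Obj)
		}
	}
}

func main() {
	f := newToyDeltaFIFO()
	// Simulated Reflector output.
	f.Push(Delta{Added, "default/pod-a", "Pending"})
	f.Push(Delta{Added, "default/pod-b", "Running"})
	f.Push(Delta{Updated, "default/pod-a", "Running"})
	f.Push(Delta{Deleted, "default/pod-b", "Running"})

	store := map[string]string{}
	processAll(f, store, func(t DeltaType, key, obj string) { fmt.Println(t, key, obj) })
	fmt.Println(store)
}
```

Note that `pod-a`'s add and update are delivered together even though `pod-b`'s add arrived between them: ordering is preserved per object, not across objects.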

Informers vs Watches

Watches: A watch is a lower-level Kubernetes API that streams changes to a specific resource type in real time as events (added, modified, deleted). It is edge-based, meaning you are notified of changes as they happen, but it gives you no cache, and recovering from a dropped connection is up to you.

Informers: An informer is a higher-level abstraction built on top of watches. It has more robust error handling: for example, if a network failure breaks a long-running watch connection, the informer recovers by re-listing and re-watching, hiding such failures from the caller.
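The recovery behavior described for informers boils down to a loop: list to get a snapshot and its `resourceVersion`, watch from that version, and re-list if the watch breaks. The sketch below is a simplified model with hypothetical `Source`, `Event`, and `flakySource` types; the real Reflector also backs off between retries and can often resume a watch from the last seen `resourceVersion` without a full re-list.

```go
package main

import (
	"errors"
	"fmt"
)

// Event is a hypothetical watch event carrying a resourceVersion.
type Event struct {
	Type            string
	Name            string
	ResourceVersion int
}

// Source is a hypothetical API: List returns a snapshot plus its resourceVersion;
// Watch streams events after that version and may fail mid-stream.
type Source interface {
	List() (names []string, rv int)
	Watch(fromRV int) ([]Event, error)
}

var errWatchClosed = errors.New("watch connection closed")

// listAndWatch lists, watches from the list's resourceVersion, and re-lists when
// the watch breaks, so the caller never sees the transient watch error.
func listAndWatch(src Source, maxAttempts int, onSnapshot func([]string), onEvent func(Event)) error {
	for attempt := 0; attempt < maxAttempts; attempt++ {
		names, rv := src.List()
		onSnapshot(names) // replace the whole cache with the fresh snapshot
		events, err := src.Watch(rv)
		for _, e := range events {
			onEvent(e)
		}
		if err == nil {
			return nil // watch ended cleanly
		}
		// The watch broke: loop around and re-list.
	}
	return fmt.Errorf("gave up after %d attempts", maxAttempts)
}

// flakySource is a hypothetical test double whose first watch fails.
type flakySource struct{ watches int }

func (s *flakySource) List() ([]string, int) { return []string{"pod-a"}, 10 }

func (s *flakySource) Watch(fromRV int) ([]Event, error) {
	s.watches++
	if s.watches == 1 {
		return []Event{{"ADDED", "pod-b", fromRV + 1}}, errWatchClosed
	}
	return []Event{{"MODIFIED", "pod-a", fromRV + 1}}, nil
}

func main() {
	src := &flakySource{}
	err := listAndWatch(src, 3,
		func(names []string) { fmt.Println("snapshot:", names) },
		func(e Event) { fmt.Println("event:", e.Type, e.Name, e.ResourceVersion) })
	fmt.Println("error seen by caller:", err)
}
```

With a raw watch, the dropped connection in `flakySource` would be your problem; here the caller only sees snapshots and events.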

How `controller-runtime` Uses Informers

The `controller-runtime` library simplifies building controllers by abstracting away the complexity of setting up watches and informers.

Manager and Cache

When you create the manager, it sets up a shared cache backed by informers. This cache helps the controller access K8s objects and reduces redundant calls to the API server.

```go
mgr, err := ctrl.NewManager(ctrl.GetConfigOrDie(), ctrl.Options{})
```

Controller Setup

Behind the scenes, the controller builder sets up informers that watch for changes to the resources the controller is interested in (here, Deployments).

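```go
// Watch Deployments; each change enqueues a reconcile request for r.
// err was already declared by the NewManager call above.
err = ctrl.NewControllerManagedBy(mgr).
	For(&appsv1.Deployment{}).
	Complete(r)
```

Reconciliation Loop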

The informer watches for changes to the resources and updates its local cache accordingly. It notifies the controller of changes, which in turn triggers the `Reconciler`.
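Between the informer and the `Reconciler` sits a work queue of object keys. The stdlib-only sketch below models one property of that queue that controller-runtime inherits from client-go's workqueue: a key that is already waiting is not queued again, so a burst of events for one object can collapse into a single reconcile. `dedupQueue` and `reconcile` are hypothetical names, and the real queue also tracks in-flight keys and supports rate-limited retries.

```go
package main

import "fmt"

// dedupQueue is a hypothetical single-goroutine model of a deduplicating work queue.
type dedupQueue struct {
	order   []string
	waiting map[string]bool
}

func newDedupQueue() *dedupQueue { return &dedupQueue{waiting: map[string]bool{}} }

// Add enqueues key unless it is already waiting to be processed.
func (q *dedupQueue) Add(key string) {
	if q.waiting[key] {
		return
	}
	q.waiting[key] = true
	q.order = append(q.order, key)
}

// Get returns the oldest waiting key.
func (q *dedupQueue) Get() (string, bool) {
	if len(q.order) == 0 {
		return "", false
	}
	k := q.order[0]
	q.order = q.order[1:]
	delete(q.waiting, k)
	return k, true
}

// reconcile is a hypothetical Reconciler: it receives only a key and reads the
// current state from the (toy) cache rather than from the triggering event.
func reconcile(cache map[string]int, key string) string {
	replicas, ok := cache[key]
	if !ok {
		return key + ": deleted, clean up"
	}
	return fmt.Sprintf("%s: ensure %d replicas", key, replicas)
}

func main() {
	cache := map[string]int{}
	q := newDedupQueue()
	// Three rapid updates to the same Deployment collapse into one queued key.
	for _, r := range []int{1, 2, 3} {
		cache["default/web"] = r
		q.Add("default/web")
	}
	for {
		key, ok := q.Get()
		if !ok {
			break
		}
		fmt.Println(reconcile(cache, key))
	}
}
```

Because the Reconciler works from a key and the current cache contents, it does not matter how many events were coalesced; it acts on the latest state it can see.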

Implementing Informers

The Kubernetes programming interface for Go consists mainly of the `k8s.io/client-go` library. Assuming you have access to a Kubernetes cluster, you can use `client-go` to access its resources.

Creating a Single Informer

In the example below, we create a single informer that watches resources of kind Pod. Note that `NewSharedInformerFactory` is not namespace-scoped, so this informer sees Pods in all namespaces.

```go
// Create a shared informer factory with a 30-second resync period
informerFactory := informers.NewSharedInformerFactory(clientSet, 30*time.Second)

// Get the Pod informer from the factory
podInformer := informerFactory.Core().V1().Pods().Informer()
```

There are cases where you want more control when creating the informerFactory. For example, you may only want to monitor resources in a given namespace, or go a step further and only care about resources with a particular label. In this case, use `NewFilteredSharedInformerFactory`.

// Create a filtered shared informer

informerFactory := informers.NewFilteredSharedInformerFactory(

clientSet,

30*time.Second,

"my-namespace",

func(lo *v1.ListOptions) {

lo.LabelSelector = "my-label"

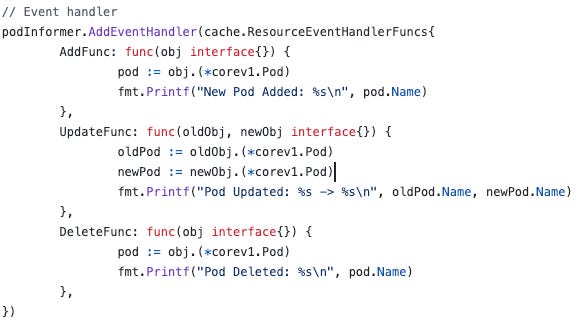

})Now that we have created a new informer, we need to register handler functions for add, update, and delete events. These are done by `AddEventHandler`, which adds the event to the shared informer using the resync period, and there is no coordination between the handlers.

https://github.com/breathOfTech/informers/blob/main/pod-informer/main.go#L50
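A rough model of that delivery contract: each registered handler gets its own channel and goroutine, so notifications to one handler arrive in order while different handlers run independently of each other. The `resync` method re-delivers every cached key as an update, which is what a non-zero resync period does. `toySharedInformer`, `Notification`, and `recorder` are hypothetical; client-go uses a growing buffer per handler rather than the fixed-size channel used here.

```go
package main

import (
	"fmt"
	"sync"
)

// Notification is a hypothetical event delivered to handlers.
type Notification struct {
	Kind string // "add", "update", "delete"
	Key  string
}

// toySharedInformer fans each notification out to every registered handler.
type toySharedInformer struct {
	mu    sync.Mutex
	chans []chan Notification
	wg    sync.WaitGroup
}

// AddEventHandler gives the handler its own channel and goroutine.
func (s *toySharedInformer) AddEventHandler(h func(Notification)) {
	ch := make(chan Notification, 16)
	s.mu.Lock()
	s.chans = append(s.chans, ch)
	s.mu.Unlock()
	s.wg.Add(1)
	go func() {
		defer s.wg.Done()
		for n := range ch { // sequential delivery for this handler only
			h(n)
		}
	}()
}

func (s *toySharedInformer) distribute(n Notification) {
	s.mu.Lock()
	defer s.mu.Unlock()
	for _, ch := range s.chans {
		ch <- n
	}
}

// resync re-delivers every cached key as an "update".
func (s *toySharedInformer) resync(cachedKeys []string) {
	for _, k := range cachedKeys {
		s.distribute(Notification{"update", k})
	}
}

// shutdown closes all handler channels and waits for handlers to drain.
func (s *toySharedInformer) shutdown() {
	s.mu.Lock()
	for _, ch := range s.chans {
		close(ch)
	}
	s.mu.Unlock()
	s.wg.Wait()
}

// recorder is a hypothetical handler that records what it saw.
type recorder struct {
	mu   sync.Mutex
	seen []string
}

func (r *recorder) handle(n Notification) {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.seen = append(r.seen, n.Kind+" "+n.Key)
}

func main() {
	inf := &toySharedInformer{}
	a, b := &recorder{}, &recorder{}
	inf.AddEventHandler(a.handle)
	inf.AddEventHandler(b.handle)
	inf.distribute(Notification{"add", "default/pod-a"})
	inf.resync([]string{"default/pod-a"})
	inf.shutdown()
	fmt.Println(a.seen)
	fmt.Println(b.seen)
}
```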

Multi-Resource Informers

The example above monitored changes to Pods, but you can also use the same informerFactory to monitor multiple resource types. Here’s an example of a controller that watches Deployments, Services, and Pods.
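Sharing works because the factory memoizes informers: requesting the same resource type twice returns the same informer, and therefore the same watch connection and cache. The toy factory below is a hypothetical model of that behavior, keyed by a resource-name string; client-go keys its map by Go type, and `toyFactory`/`toyInformer` are not client-go names.

```go
package main

import (
	"fmt"
	"sync"
)

// toyInformer stands in for a shared informer, which owns one watch and one cache.
type toyInformer struct {
	resource string
	started  bool
}

// toyFactory returns the same informer for repeated requests of one resource.
type toyFactory struct {
	mu        sync.Mutex
	informers map[string]*toyInformer
}

func newToyFactory() *toyFactory { return &toyFactory{informers: map[string]*toyInformer{}} }

func (f *toyFactory) InformerFor(resource string) *toyInformer {
	f.mu.Lock()
	defer f.mu.Unlock()
	if inf, ok := f.informers[resource]; ok {
		return inf // reuse the existing informer, watch, and cache
	}
	inf := &toyInformer{resource: resource}
	f.informers[resource] = inf
	return inf
}

// Start launches every informer requested so far that is not running yet
// and reports how many it started.
func (f *toyFactory) Start() int {
	f.mu.Lock()
	defer f.mu.Unlock()
	n := 0
	for _, inf := range f.informers {
		if !inf.started {
			inf.started = true
			n++
		}
	}
	return n
}

func main() {
	f := newToyFactory()
	a := f.InformerFor("pods") // e.g. requested by one controller
	b := f.InformerFor("pods") // ...and by another
	f.InformerFor("services")
	fmt.Println("same informer:", a == b)
	fmt.Println("started:", f.Start())
	fmt.Println("started on second call:", f.Start())
}
```

The same model shows why ordering matters in client-go: `Start` only launches informers that were requested before it ran, so an informer obtained afterwards stays idle until `Start` is called again. The controller in this section obtains all three informers in its constructor (when it registers their event handlers), before `Run` calls `Start`.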

Struct for Multiple Resources

First, create a multi-resource controller struct that holds a shared informer factory and the informer for each resource type you want to keep track of.

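```go
// appsinformers is k8s.io/client-go/informers/apps/v1
// coreinformers is k8s.io/client-go/informers/core/v1
type MultiResourceController struct {
	informerFactory informers.SharedInformerFactory
	deployInformer  appsinformers.DeploymentInformer
	serviceInformer coreinformers.ServiceInformer
	podInformer     coreinformers.PodInformer
}
```

Initialization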

Next, initialize the controller. Because every informer comes from the same factory, all controllers built on that factory share the same watch connections and caches, which reduces the load on the API server.

```go
func NewMultiResourceController(informerFactory informers.SharedInformerFactory) (*MultiResourceController, error) {
	// Typed accessors; calling .Informer() on them registers the informer with the factory
	deployInformer := informerFactory.Apps().V1().Deployments()
	serviceInformer := informerFactory.Core().V1().Services()
	podInformer := informerFactory.Core().V1().Pods()

	c := &MultiResourceController{
		informerFactory: informerFactory,
		deployInformer:  deployInformer,
		serviceInformer: serviceInformer,
		podInformer:     podInformer,
	}

	// Event handlers are added here
	// ...

	return c, nil
}
```

Setting up event handlers

These event handlers are the controller's ears. They listen for additions, updates, and deletions of resources, allowing the controller to make decisions in near real time.

```go
// AddEventHandler returns a registration handle (ignored here) and an error
_, err := deployInformer.Informer().AddEventHandler(
	cache.ResourceEventHandlerFuncs{
		AddFunc:    c.deploymentAdd,
		UpdateFunc: c.deploymentUpdate,
		DeleteFunc: c.deploymentDelete,
	},
)
if err != nil {
	return nil, err
}
// Similar handlers are added for services and pods
```

Running the controller

This is where the magic happens! `Run` starts the informer factory, which in turn starts all the individual informers, and then waits until each informer's cache has synced.

```go
func (c *MultiResourceController) Run(stopCh <-chan struct{}) error {
	// Start every informer requested from the factory so far
	c.informerFactory.Start(stopCh)

	// Block until each informer's initial list has populated its cache
	if !cache.WaitForCacheSync(stopCh,
		c.deployInformer.Informer().HasSynced,
		c.serviceInformer.Informer().HasSynced,
		c.podInformer.Informer().HasSynced) {
		return fmt.Errorf("failed to sync")
	}
	return nil
}
```

Main Func

Finally, we tie everything together in the main function. Here, we create the shared informer factory, initialize our controller, and start it. The `select {}` at the end blocks forever, which keeps the program running so the controller can continuously monitor and respond to cluster events.

```go
func main() {
	// ... (client setup omitted for brevity)

	// One factory for all resource types, with a 24-hour resync period
	factory := informers.NewSharedInformerFactory(clientset, time.Hour*24)

	controller, err := NewMultiResourceController(factory)
	if err != nil {
		klog.Fatalf("Error creating controller: %s", err.Error())
	}

	stop := make(chan struct{})
	defer close(stop)

	err = controller.Run(stop)
	if err != nil {
		klog.Fatalf("Error running controller: %s", err.Error())
	}

	// Block forever so the informers keep running
	select {}
}
```

The handling logic is customizable based on your specific requirements. This post demonstrates how to use a single informerFactory to monitor multiple resource types. You can view the complete code here.
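The `Run` method relies on `cache.WaitForCacheSync`, which blocks until every `HasSynced` function reports true or the stop channel closes. Here is a stdlib-only sketch of that polling loop; `waitForSync` and `pollPeriod` are hypothetical names, and the 100ms interval is an assumption chosen to mirror client-go's short fixed poll interval.

```go
package main

import (
	"fmt"
	"sync/atomic"
	"time"
)

// pollPeriod is an assumed polling interval for this sketch.
const pollPeriod = 100 * time.Millisecond

// waitForSync polls every synced func until all return true (result: true)
// or stop is closed first (result: false).
func waitForSync(stop <-chan struct{}, period time.Duration, synced ...func() bool) bool {
	ticker := time.NewTicker(period)
	defer ticker.Stop()
	for {
		all := true
		for _, s := range synced {
			if !s() {
				all = false
				break
			}
		}
		if all {
			return true
		}
		select {
		case <-stop:
			return false
		case <-ticker.C:
		}
	}
}

func main() {
	var podsSynced int32
	go func() {
		time.Sleep(250 * time.Millisecond) // pretend the initial List takes a while
		atomic.StoreInt32(&podsSynced, 1)
	}()

	stop := make(chan struct{})
	defer close(stop)
	ok := waitForSync(stop, pollPeriod,
		func() bool { return atomic.LoadInt32(&podsSynced) == 1 },
		func() bool { return true }, // e.g. an informer that has already synced
	)
	fmt.Println("caches synced:", ok)
}
```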

Gotchas

Don’t mutate objects from the informer’s cache: Informers and listers own the objects they return, and those objects are shared with every other reader of the cache. Mutating them in place puts you at risk of hard-to-debug cache issues in your application, so make a deep copy before any mutation.
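The deep-copy rule is easy to see with a toy cache. In this hypothetical model, `toyLister` hands out pointers into a shared map, as a real Lister does, and `Pod.DeepCopy` plays the role of the `DeepCopy()` method generated for Kubernetes API types. None of these types are client-go's.

```go
package main

import "fmt"

// Pod is a hypothetical stand-in for an API type.
type Pod struct {
	Name   string
	Labels map[string]string
}

// DeepCopy copies the struct and the map it references, so nothing is shared.
func (p *Pod) DeepCopy() *Pod {
	out := &Pod{Name: p.Name, Labels: make(map[string]string, len(p.Labels))}
	for k, v := range p.Labels {
		out.Labels[k] = v
	}
	return out
}

// toyLister returns pointers into the shared cache.
type toyLister struct{ cache map[string]*Pod }

func (l toyLister) Get(name string) *Pod { return l.cache[name] }

func main() {
	l := toyLister{cache: map[string]*Pod{
		"web": {Name: "web", Labels: map[string]string{"tier": "frontend"}},
	}}

	// Wrong: editing the returned object silently changes the shared cache.
	l.Get("web").Labels["tier"] = "oops"
	fmt.Println("cache after in-place edit:", l.cache["web"].Labels["tier"])

	l.cache["web"].Labels["tier"] = "frontend" // reset the toy cache

	// Right: copy first, then mutate the copy (and send that to the API server).
	c := l.Get("web").DeepCopy()
	c.Labels["tier"] = "backend"
	fmt.Println("cache after copy and edit:", l.cache["web"].Labels["tier"])
}
```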

Lag: Informers operate asynchronously, so there can be a delay between a change in the cluster and the corresponding update to the local cache. For example, a controller might read a pod's status from the informer cache and update the pod based on that information, even though the pod's actual state in the cluster has changed since the cache was last updated. Controllers that act on what they see in the cache can therefore overwrite recent changes or make decisions based on outdated information.
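Kubernetes guards against many stale writes with optimistic concurrency: an update carries the object's `resourceVersion`, and the API server rejects it with a conflict if the object has changed since. The sketch below models that check and a re-read-and-retry loop, similar in spirit to client-go's `retry.RetryOnConflict`. `apiServer`, `Obj`, and `updateWithRetry` are hypothetical, and the integer `resourceVersion` is a simplification; real resource versions are opaque strings that clients should not compare numerically.

```go
package main

import (
	"errors"
	"fmt"
)

var errConflict = errors.New("conflict: object has been modified")

// Obj is a hypothetical object with a (simplified, integer) resourceVersion.
type Obj struct {
	ResourceVersion int
	Replicas        int
}

// apiServer models the optimistic concurrency check applied on update.
type apiServer struct{ current Obj }

// Update succeeds only if the caller's copy is based on the latest version.
func (s *apiServer) Update(o Obj) error {
	if o.ResourceVersion != s.current.ResourceVersion {
		return errConflict
	}
	o.ResourceVersion++
	s.current = o
	return nil
}

// updateWithRetry re-reads and re-applies the change whenever it hits a conflict.
func updateWithRetry(s *apiServer, read func() Obj, mutate func(*Obj), attempts int) error {
	var err error
	for i := 0; i < attempts; i++ {
		o := read()
		mutate(&o)
		if err = s.Update(o); !errors.Is(err, errConflict) {
			return err
		}
	}
	return err
}

func main() {
	s := &apiServer{current: Obj{ResourceVersion: 7, Replicas: 2}}

	stale := Obj{ResourceVersion: 6, Replicas: 2} // what a lagging cache might hold
	stale.Replicas = 3
	fmt.Println("update from stale copy:", s.Update(stale))

	err := updateWithRetry(s, func() Obj { return s.current }, func(o *Obj) { o.Replicas = 3 }, 3)
	fmt.Println("retry result:", err, "replicas:", s.current.Replicas)
}
```

A conflict only protects the write itself; decisions made from stale cache data can still be wrong, which is why reconcile logic is usually written so it is safe to run again once the cache catches up.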

Conclusion

In practice, you want to focus on your application's core logic while relying on battle-tested implementations of watch and cache mechanisms. Building your controllers and operators with `controller-runtime` gives you higher-level abstractions that use informers internally and hide their complexity.

However, understanding how informers work "under the hood" is valuable. It enables you to make informed decisions about your application's architecture and is crucial when optimizing performance or implementing advanced patterns.

Hopefully, this post has provided you with enough insight into informers.