Keeping Your Kubernetes Operators Under Control

Understanding Rate Limiters in Reconcilers

Imagine you're a waiter in a busy restaurant. Customers (Kubernetes resources) are constantly raising their hands (events) and requesting service (reconciliation). You (the operator) do your best to fulfill their needs, but if everyone yells for attention at once, chaos arises. This is where rate limiters come in, acting as a virtual "ticket system" for your operator's reconciler loop.

What is a Kubernetes Operator?

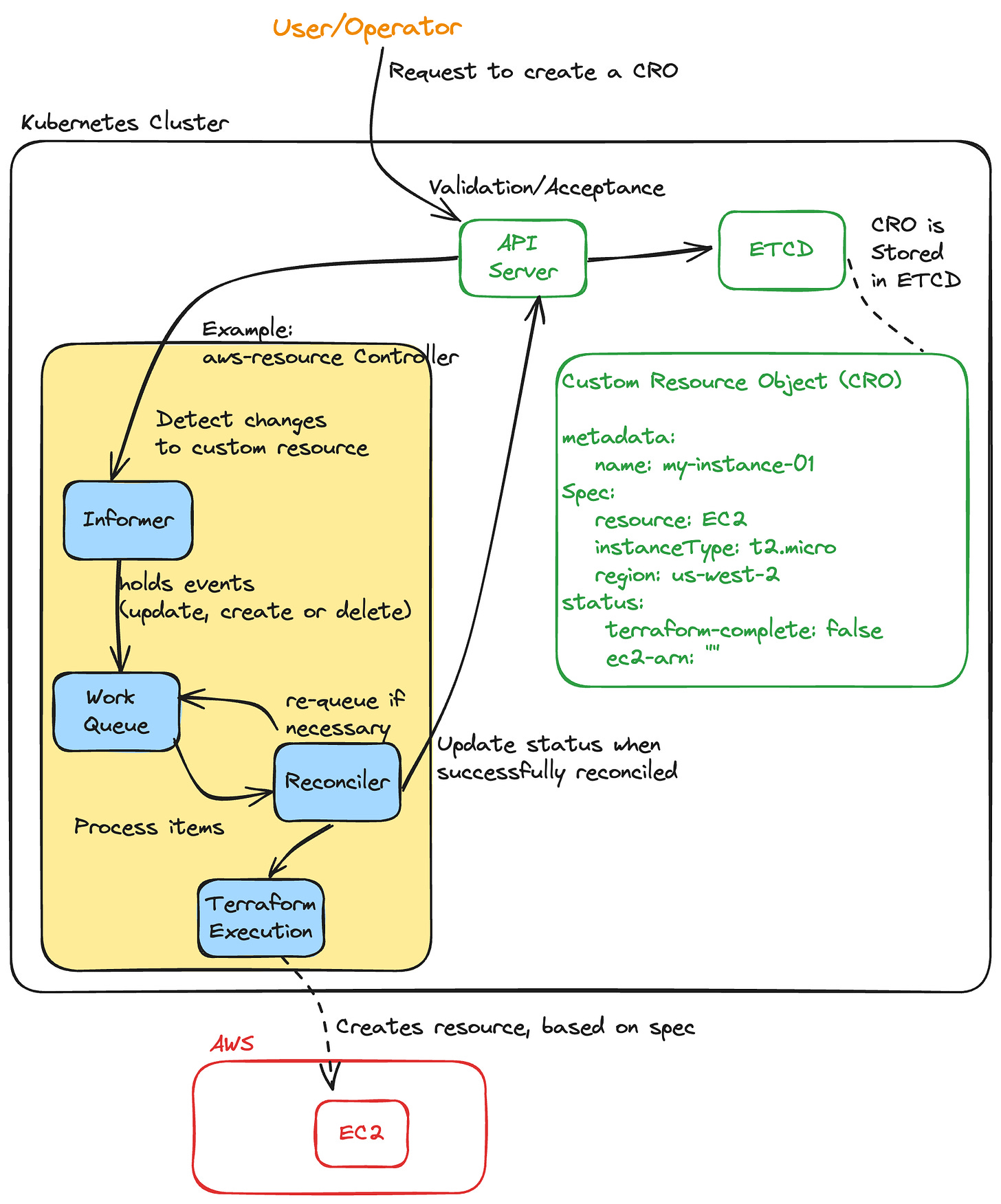

Operators are software extensions to Kubernetes that make use of custom resources to manage applications and their components. Operators follow Kubernetes principles, notably the control loop.

Custom Resource (CR): Kubernetes recognizes its native resources, such as Pods and Services, out of the box. Think of a Custom Resource Definition (CRD) as a schema that you define and that Kubernetes then understands. Custom Resource Objects (CROs) are the instances of these schemas.

Custom Controller: Kubernetes does not monitor custom resources by itself; you need to write a controller that watches these objects and acts when they change.

In essence, an operator is a custom controller that manages the lifecycle of your custom resource. You specify the desired state in the custom resource’s Spec; the controller monitors the object and takes action (reconciliation) when necessary.

When adding custom resources and controllers, you can still leverage the whole Kubernetes ecosystem. For example, CRDs and CROs are persisted in etcd, the key-value store that maintains the cluster’s state; you can apply RBAC policies to them; and you can reuse existing Kubernetes monitoring and logging solutions.

The Reconciler: Heart of the Operator

In robotics and automation, a control loop is a non-terminating loop that regulates the state of a system.

The core component of an operator is the reconciliation loop. It constantly watches the custom resource for changes such as add, update, and delete, and triggers reconciliation whenever a change is detected.

Add: Ensure that reconciliation is idempotent, so that handling the same add event again does not create duplicate resources.

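Update: We have to be a little careful with updates. Usually only a change to the Spec should cause reconciliation, while updates to other fields, like status or annotations, should not. In controller-runtime this filtering is not automatic: by default every update event triggers a reconcile, and an event filter such as predicate.GenerationChangedPredicate is what skips updates that leave metadata.generation unchanged.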

Delete: Finalizers should be used when a custom resource requires cleanup before deletion. For example, if the custom resource is associated with Amazon Web Services (AWS) resources, those need to be deleted before the custom resource itself.
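The three cases above can be sketched without any Kubernetes dependencies. The following is a toy model, not controller-runtime code: the `resource` struct, the finalizer key `example.com/cloud-cleanup`, and the `reconcile` signature are all hypothetical stand-ins. It also assumes that `Generation` changes only when Spec changes, which is how `metadata.generation` behaves for CRDs with the status subresource enabled.

```go
package main

import (
	"errors"
	"fmt"
)

// finalizerName is a hypothetical finalizer key used only in this sketch.
const finalizerName = "example.com/cloud-cleanup"

// errCleanupFailed simulates an external API that is temporarily unavailable.
var errCleanupFailed = errors.New("cloud API unavailable")

// resource is a stripped-down stand-in for a custom resource's metadata.
type resource struct {
	Generation int64    // assumed to change only when Spec changes
	Finalizers []string // deletion is blocked while this is non-empty
	Deleting   bool     // stands in for a set metadata.deletionTimestamp
}

// specChanged reports whether an update touched Spec, judged by generation.
func specChanged(oldObj, newObj resource) bool {
	return oldObj.Generation != newObj.Generation
}

// finalizerIndex returns the position of finalizerName, or -1 if absent.
func finalizerIndex(r *resource) int {
	for i, f := range r.Finalizers {
		if f == finalizerName {
			return i
		}
	}
	return -1
}

// reconcile is safe to call repeatedly: each call inspects the current state
// and performs only the step that is still missing.
func reconcile(r *resource, cleanup func() error) (string, error) {
	idx := finalizerIndex(r)
	if r.Deleting {
		if idx < 0 {
			return "nothing to clean up", nil
		}
		if err := cleanup(); err != nil {
			// Keep the finalizer so the object survives until a retry succeeds.
			return "cleanup failed", err
		}
		r.Finalizers = append(r.Finalizers[:idx], r.Finalizers[idx+1:]...)
		return "finalizer removed", nil
	}
	if idx < 0 {
		r.Finalizers = append(r.Finalizers, finalizerName)
		return "finalizer added", nil
	}
	return "in sync", nil
}

func main() {
	ok := func() error { return nil }
	failing := func() error { return errCleanupFailed }

	// A status-only update leaves the generation untouched.
	fmt.Println(specChanged(resource{Generation: 1}, resource{Generation: 1}))

	r := &resource{Generation: 1}
	fmt.Println(reconcile(r, ok)) // first pass registers the finalizer
	fmt.Println(reconcile(r, ok)) // second pass changes nothing
	r.Deleting = true
	fmt.Println(reconcile(r, failing)) // cleanup error keeps the finalizer
	fmt.Println(reconcile(r, ok))      // retry succeeds and releases the object
}
```

The failed cleanup is the interesting case: because the finalizer stays in place, the object is not removed until a later retry manages to clean up the external resources.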

Kubernetes Operator Rate Limiters: Diving Deeper

Let’s say you have a controller, aws-resource-controller, based on the example diagram above, that brings up AWS resources based on the CR’s spec. Now you have a requirement to update the instance type, and you send this patch to all the CROs. The controller uses Informers to keep track of the resources, and when an object is updated, a callback puts the changed object into the WorkQueue. The WorkQueue supports several types of queuing techniques, the rate-limiting queue being one of them.

A rate limiter acts as a gatekeeper for your reconciler loop. While reconcilers ensure that the desired state is maintained, they can be resource-intensive: the operation may be a chain of actions rather than a single Terraform run that brings up an EC2 instance. The rate limiter introduces a delay or backoff mechanism, ensuring resources are reconciled at a controlled pace and preventing the system from being overloaded.

Supported Rate Limiter Types:

Exponential Failure Rate Limiter (ItemExponentialFailureRateLimiter)

Token Bucket Rate Limiter (BucketRateLimiter)

Fast Slow Rate Limiter (ItemFastSlowRateLimiter)

Hybrid Rate Limiter (MaxOfRateLimiter)

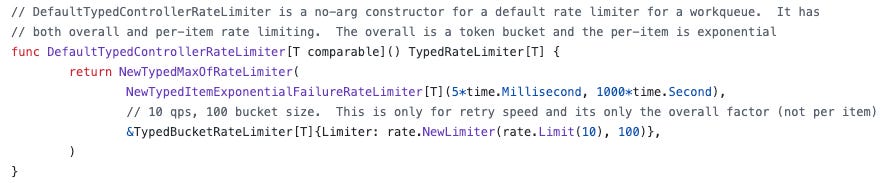
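The fast/slow and hybrid limiters in the list are the least self-explanatory, so here is a dependency-free sketch of the idea behind each. It is not the client-go source: the `fastSlow` type and `maxOf` helper are simplified stand-ins, and the delays in `main` are arbitrary illustration values.

```go
package main

import (
	"fmt"
	"time"
)

// fastSlow mimics the fast/slow idea: an item is retried after fastDelay for
// its first maxFast failures, and after slowDelay from then on.
type fastSlow struct {
	failures  map[string]int
	fastDelay time.Duration
	slowDelay time.Duration
	maxFast   int
}

func newFastSlow(fast, slow time.Duration, maxFast int) *fastSlow {
	return &fastSlow{
		failures:  map[string]int{},
		fastDelay: fast,
		slowDelay: slow,
		maxFast:   maxFast,
	}
}

// When records one more failure for item and returns how long to wait.
func (f *fastSlow) When(item string) time.Duration {
	f.failures[item]++
	if f.failures[item] <= f.maxFast {
		return f.fastDelay
	}
	return f.slowDelay
}

// maxOf returns the longest of the given delays, which is how a hybrid
// limiter lets the most conservative of its limiters win.
func maxOf(delays ...time.Duration) time.Duration {
	var longest time.Duration
	for _, d := range delays {
		if d > longest {
			longest = d
		}
	}
	return longest
}

func main() {
	fs := newFastSlow(10*time.Millisecond, 2*time.Second, 3)
	for i := 1; i <= 5; i++ {
		fmt.Printf("failure %d: retry in %v\n", i, fs.When("tenant-a"))
	}
	// A hybrid limiter asks every limiter and waits for the slowest answer.
	fmt.Println("hybrid:", maxOf(40*time.Millisecond, 100*time.Millisecond))
}
```

With these values, the first three failures are retried after 10ms each and later ones after 2s, while the hybrid call picks 100ms over 40ms.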

Default Rate Limiting Behavior:

The default rate limiter in controller-runtime, borrowed from client-go, combines an exponential failure rate limiter with a token bucket rate limiter, providing a balanced approach to handling retries in Kubernetes controllers.

Exponential Failure Rate Limiter:

The limiter increases the retry interval exponentially with each failure of an individual item.

Initial Interval: 5 milliseconds

Maximum interval: 1000 seconds

Token Bucket Rate Limiter:

This limiter controls the overall rate at which items can be processed, smoothing out bursts and ensuring a consistent rate of retries.

Rate: 10 requests per second

Bucket size: 100 tokens

The token bucket rate limiter ensures that the overall rate of retries does not exceed 10 requests per second, with a burst capacity of up to 100 tokens. This means that even if the exponential rate limiter schedules a retry for an item, the token bucket limiter will delay it if the overall rate exceeds the limit.
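To make the burst-then-throttle behavior concrete, here is a toy token bucket using only the standard library. It is a simplified, single-goroutine sketch and not the `golang.org/x/time/rate` implementation that client-go uses; the `tokenBucket` type and `burstDelays` helper are hypothetical names, and the rate of 10 with a burst of 100 simply mirrors the defaults described above.

```go
package main

import (
	"fmt"
	"time"
)

// tokenBucket is a toy token bucket. Tokens refill at `rate` per second up to
// `burst`; each request spends one token, and a request that overdraws the
// bucket is told how long to wait.
type tokenBucket struct {
	rate   float64 // tokens added per second
	burst  float64 // maximum tokens the bucket can hold
	tokens float64 // current balance; negative means requests are waiting
	last   time.Time
}

func newTokenBucket(rate, burst float64, now time.Time) *tokenBucket {
	return &tokenBucket{rate: rate, burst: burst, tokens: burst, last: now}
}

// When reserves one token at time now and returns the delay before the
// caller may proceed.
func (b *tokenBucket) When(now time.Time) time.Duration {
	// Refill for the time elapsed since the previous call, capped at burst.
	b.tokens += now.Sub(b.last).Seconds() * b.rate
	if b.tokens > b.burst {
		b.tokens = b.burst
	}
	b.last = now

	b.tokens-- // spend (or borrow) one token
	if b.tokens >= 0 {
		return 0
	}
	// Time needed to refill the borrowed tokens.
	return time.Duration(-b.tokens * float64(time.Second) / b.rate)
}

// burstDelays simulates n requests arriving at the same instant against a
// bucket that allows 10 requests per second with a burst of 100.
func burstDelays(n int) []time.Duration {
	start := time.Unix(0, 0)
	b := newTokenBucket(10, 100, start)
	delays := make([]time.Duration, n)
	for i := range delays {
		delays[i] = b.When(start)
	}
	return delays
}

func main() {
	delays := burstDelays(102)
	for _, i := range []int{0, 99, 100, 101} {
		fmt.Printf("request %d waits %v\n", i+1, delays[i])
	}
}
```

In this simulation the first 100 simultaneous requests pass immediately, the 101st waits 100ms, and the 102nd waits 200ms, which is the steady 10-per-second pace taking over once the burst is spent.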

You can also change the rate limiter’s values to suit your use case. Maybe you don’t want the first retry to happen after 5 ms. In that case, you can do the following:

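    import (
        "time"

        "golang.org/x/time/rate"
        "k8s.io/client-go/util/workqueue"
        ctrl "sigs.k8s.io/controller-runtime"
        "sigs.k8s.io/controller-runtime/pkg/controller"
        // plus your project's API package, imported as baniyapratikv1alpha1
    )

    // newCustomRateLimiter backs off per item from 20s up to 1000s and allows
    // at most 20 items per second overall, with a burst of 100.
    // Newer client-go/controller-runtime releases may require the typed (generic) variants.
    func newCustomRateLimiter() workqueue.RateLimiter {
        return workqueue.NewMaxOfRateLimiter(
            workqueue.NewItemExponentialFailureRateLimiter(20*time.Second, 1000*time.Second),
            &workqueue.BucketRateLimiter{Limiter: rate.NewLimiter(rate.Limit(20), 100)},
        )
    }

    // SetupWithManager sets up the controller with the Manager.
    func (r *ExampleReconciler) SetupWithManager(mgr ctrl.Manager) error {
        return ctrl.NewControllerManagedBy(mgr).
            For(&baniyapratikv1alpha1.Example{}).
            WithOptions(controller.Options{
                RateLimiter: newCustomRateLimiter(),
            }).
            Complete(r)
    }

controller-runtime’s NewControllerManagedBy initializes a new controller that will be managed by the manager, `mgr`, which is responsible for the controller’s lifecycle. Here we specify the rate limiter we want to use and the resource we want to keep track of, which in our case is the resource type `Example`.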

Example scenario of item failure handling:

ItemExponentialFailureRateLimiter does a simple baseDelay*2^<num-failures> limit

The first time an item fails to reconcile, it is scheduled for a retry after 5 ms (5ms * 2^0); if it fails again, it is retried after 10 ms (5ms * 2^1), and then after 20 ms (5ms * 2^2). This continues, with the interval growing exponentially up to the maximum, until the item reaches the desired state. In large-scale distributed systems, things are bound to fail, and this automatic retry with exponential backoff lets the system recover without overwhelming it. One of the reasons we used this approach was to bring up tenant (customer) resources with Terraform, and this auto-recovery mechanism saved our bacon.
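The arithmetic in this scenario can be reproduced with a few lines of standard-library Go. This is a simplified sketch of the per-item `baseDelay*2^<num-failures>` rule, not the client-go source; the `itemBackoff` type is a hypothetical stand-in, and the 5 ms base and 1000 s cap are the default values quoted earlier.

```go
package main

import (
	"fmt"
	"math"
	"time"
)

// itemBackoff tracks failures per item and computes base * 2^failures,
// capped at maxDelay.
type itemBackoff struct {
	failures map[string]int
	base     time.Duration
	maxDelay time.Duration
}

func newItemBackoff(base, maxDelay time.Duration) *itemBackoff {
	return &itemBackoff{failures: map[string]int{}, base: base, maxDelay: maxDelay}
}

// When returns the delay for the item's next retry and then records the
// failure, so the first call yields base * 2^0.
func (b *itemBackoff) When(item string) time.Duration {
	exp := b.failures[item]
	b.failures[item]++

	backoff := float64(b.base) * math.Pow(2, float64(exp))
	if backoff > float64(b.maxDelay) {
		return b.maxDelay // also covers very large (or infinite) values
	}
	return time.Duration(backoff)
}

// Forget resets the failure count, typically after a successful reconcile.
func (b *itemBackoff) Forget(item string) {
	delete(b.failures, item)
}

func main() {
	b := newItemBackoff(5*time.Millisecond, 1000*time.Second)
	for i := 1; i <= 4; i++ {
		fmt.Printf("failure %d: retry in %v\n", i, b.When("tenant-a"))
	}
	b.Forget("tenant-a") // the item finally reconciled successfully
	fmt.Printf("after success, next failure: retry in %v\n", b.When("tenant-a"))
}
```

The first four failures yield 5ms, 10ms, 20ms, and 40ms. After `Forget`, the sequence starts again at 5ms, which is why a controller should reset an item's history once it reconciles cleanly.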

When to Implement Your Own Rate Limiter:

Implementing your own rate limiter requires careful design and testing. Ensure it aligns with your specific needs and doesn’t introduce unnecessary complexity.

While libraries often provide only basic options, you might need a custom rate limiter if:

Integration with external service: You want to integrate with an external service or monitoring system.

Quota Management with external service: If you deal with a third-party service like OpenAI, there is a quota on how many requests you can make per second. In cases like this, integrate your rate limiter with a service that manages quotas for different users or resources. This allows for centralized control and enforcement of usage limits.

Alerting on excessive requests: Configure your custom rate limiter to trigger alerts if specific resources experience unusually high request rates. This can help identify

Fine-grained control: You require granular control over rate limits based on criteria like resource type or user requests. For instance:

Rate limit by resource type: Different resources have different needs. Implement different rate limits for different custom resources based on their requirements.

Rate limit by user: Introduce user-specific rate limits to prevent abusive or unfair resource allocation across multiple users. This helps prevent a single user from hogging all the resources.

Specific algorithm required: Sometimes the default token bucket or exponential backoff rate limiter may not cut it. It’s not very common, but you can implement more complex rate limiting algorithms, such as fixed window or leaky bucket, for specific use cases.
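As one illustration of fine-grained control, the sketch below applies a different backoff base per resource type. It follows the When/Forget/NumRequeues method shape of client-go's rate limiter interface but uses a concrete `item` type to stay dependency-free, so it would need adapting before being plugged into a real controller. The kinds `Database` and `Cache` and all delay values are hypothetical.

```go
package main

import (
	"fmt"
	"time"
)

// item identifies a queued object; Kind is the custom resource type.
type item struct{ Kind, Name string }

// kindLimiter applies exponential backoff with a per-kind base delay.
type kindLimiter struct {
	baseByKind  map[string]time.Duration
	defaultBase time.Duration
	maxDelay    time.Duration
	failures    map[item]int
}

// newKindLimiter uses made-up values: slow-to-provision kinds back off from
// 30s, cheap ones from 100ms, and everything else from 1s.
func newKindLimiter() *kindLimiter {
	return &kindLimiter{
		baseByKind: map[string]time.Duration{
			"Database": 30 * time.Second,
			"Cache":    100 * time.Millisecond,
		},
		defaultBase: time.Second,
		maxDelay:    10 * time.Minute,
		failures:    map[item]int{},
	}
}

// When returns base * 2^failures for the item's kind, capped at maxDelay,
// and records the failure.
func (l *kindLimiter) When(it item) time.Duration {
	base, ok := l.baseByKind[it.Kind]
	if !ok {
		base = l.defaultBase
	}
	n := l.failures[it]
	l.failures[it]++

	delay := base
	for i := 0; i < n && delay < l.maxDelay; i++ {
		delay *= 2
	}
	if delay > l.maxDelay {
		delay = l.maxDelay
	}
	return delay
}

// Forget clears the item's failure history after a successful reconcile.
func (l *kindLimiter) Forget(it item) { delete(l.failures, it) }

// NumRequeues reports how many times the item has failed so far.
func (l *kindLimiter) NumRequeues(it item) int { return l.failures[it] }

func main() {
	l := newKindLimiter()
	db := item{Kind: "Database", Name: "orders"}
	cache := item{Kind: "Cache", Name: "sessions"}

	fmt.Println("database:", l.When(db), l.When(db))
	fmt.Println("cache:", l.When(cache), l.When(cache))
	fmt.Println("database requeues:", l.NumRequeues(db))
}
```

Keeping the per-kind table in one place makes the policy easy to review, but it is still extra code to test, which is the trade-off the opening of this section warns about.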

By understanding the default rate limit behavior, the different limiter types, best practices, and potential use cases for custom implementations, you can build robust and scalable Kubernetes operators that effectively manage your custom resources without overwhelming the cluster.